But on Wednesday, June 10, Amazon shocked civil rights activists and researchers when it announced that it would place a one-year moratorium on police use of Rekognition. The move followed IBM’s decision to discontinue its general-purpose face recognition system. The next day, Microsoft announced that it would stop selling its system to police departments until federal law regulates the technology. While Amazon made the smallest concession of the three companies, it is also the largest provider of the technology to law enforcement. The decision is the culmination of two years of research and external pressure to demonstrate Rekognition’s technical flaws and its potential for abuse.

“It’s incredible that Amazon’s actually responding within this current conversation around racism,” said Deborah Raji, an AI accountability researcher who coauthored a foundational study on the racial biases and inaccuracies built into the company’s technology. “It just speaks to the power of this current moment.”

“A year is a start,” says Kade Crockford, the director of the technology liberty program at the ACLU of Massachusetts. “It’s absolutely an admission on the company’s part, at least implicitly, that what racial justice advocates have been telling them for two years is correct: face surveillance technology endangers Black and brown people in the United States. That’s a remarkable admission.”

Two years in the making

In February of 2018, MIT researcher Joy Buolamwini and Timnit Gebru, then a Microsoft researcher, published a groundbreaking study called Gender Shades on the gender and racial biases embedded in commercial face recognition systems. At the time, the study included the systems sold by Microsoft, IBM, and Megvii, one of China’s largest face recognition providers. It did not cover Amazon’s Rekognition.

Nevertheless, it was the first study of its kind, and the results were shocking: the worst system, IBM’s, was 34.4 percentage points worse at classifying gender for dark-skinned women than for light-skinned men. The findings immediately debunked the accuracy claims that the companies had been using to sell their products and sparked a debate about face recognition in general.

As the debate raged, it soon became apparent that the problem was also deeper than skewed training data or imperfect algorithms. Even if the systems reached 100% accuracy, they could still be deployed in dangerous ways, many researchers and activists warned.

“There are two ways that this technology can hurt people,” says Raji, who worked with Buolamwini and Gebru on Gender Shades. “One way is by not working: by virtue of having higher error rates for people of color, it puts them at greater risk. The second situation is when it does work: where you have the perfect facial recognition system, but it’s just weaponized against communities to harass them. It’s a separate and connected conversation.”

“The work of Gender Shades was to expose the first situation,” she says. In doing so, it created an opening to expose the second.

This is what happened with IBM. After Gender Shades was published, IBM was one of the first companies to reach out to the researchers to figure out how to fix its bias problems. In January of 2019, it released a data set called Diversity in Faces, containing over 1 million annotated face images, in an effort to make such systems better. But the move backfired after people discovered that the images had been scraped from Flickr, raising issues of consent and privacy. It triggered another series of internal discussions about how to ethically train face recognition. “It led them down the rabbit hole of discovering the multitude of issues that exist with this technology,” Raji says.

So in the end, it was no surprise when the company finally pulled the plug. (Critics point out that its system didn’t have much of a foothold in the market anyway.) IBM “just realized that the ‘benefits’ were in no way proportional to the harm,” says Raji. “And in this particular moment, it was the right time for them to go public about it.”

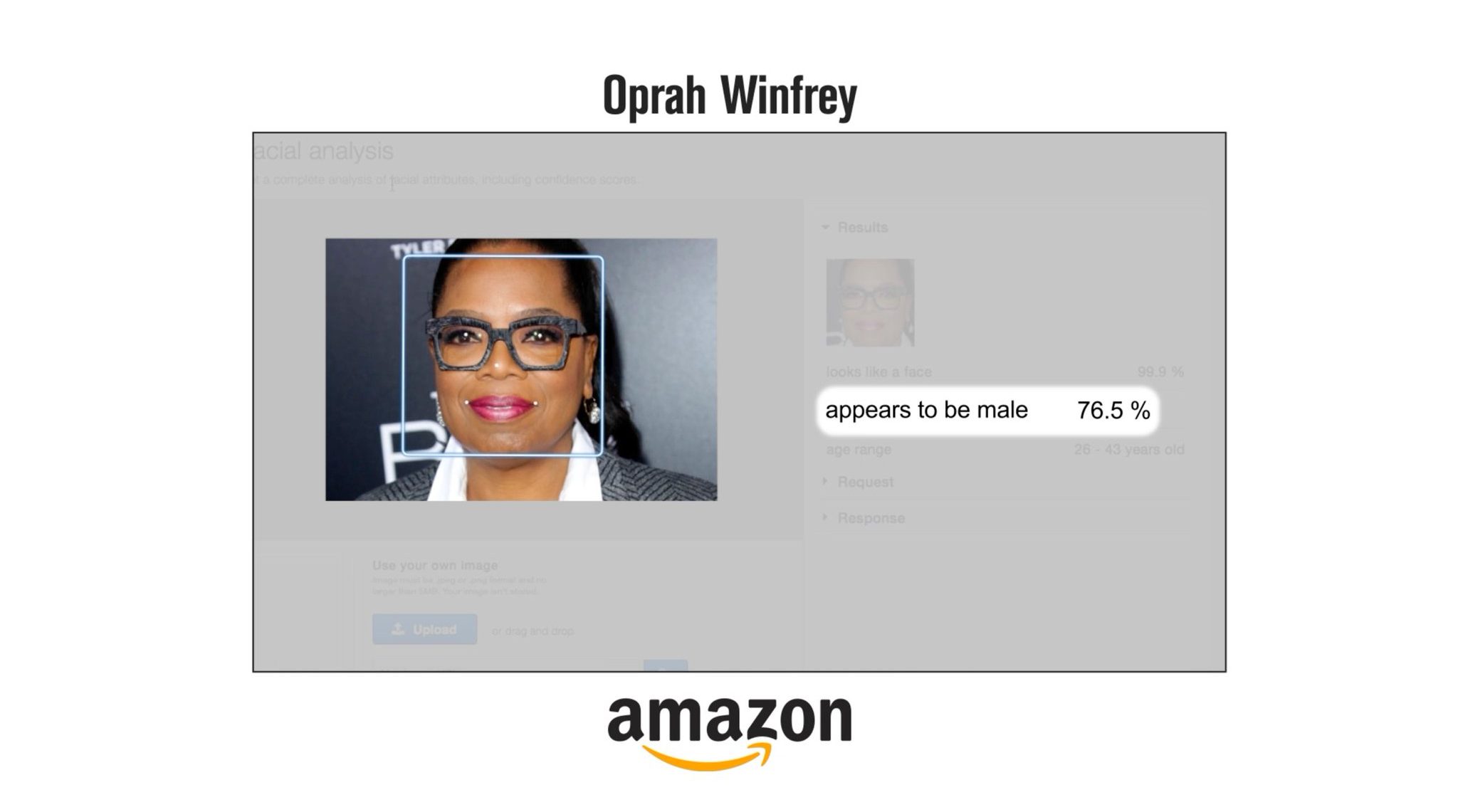

But while IBM was responsive to external feedback, Amazon had the opposite reaction. In June of 2018, in the midst of all the other letters demanding that the company stop police use of Rekognition, Raji and Buolamwini expanded the Gender Shades audit to cover its performance. The results, published half a year later in a peer-reviewed paper, once again found huge technical inaccuracies. Rekognition was classifying the gender of dark-skinned women 31.4 percentage points less accurately than that of light-skinned men.

In July, the ACLU of Northern California also conducted its own study, finding that the system falsely matched photos of 28 members of the US Congress with mugshots. The false matches were disproportionately people of color.

Rather than acknowledge the results, however, Amazon published two blog posts claiming that Raji and Buolamwini’s work was misleading. In response, nearly 80 AI researchers, including Turing Award winner Yoshua Bengio, defended the work and yet again called for the company to stop selling face recognition to the police.

“It was such an emotional experience at the time,” Raji recalls. “We had done so much due diligence with respect to our results. And then the initial response was so directly confrontational and aggressively defensive.”

“Amazon tried to discredit their research; it tried to undermine them as Black women who led this research,” says Meredith Whittaker, cofounder and director of the AI Now Institute, which studies the social impacts of AI. “It tried to spin up a narrative that they had gotten it wrong, that anyone who understood the tech clearly would know this wasn’t a problem.”

In fact, even as it was publicly dismissing the study, Amazon was starting to invest in researching fixes behind the scenes. It hired a fairness lead, invested in an NSF research grant to mitigate the issues, and released a new version of Rekognition a few months later, responding directly to the study’s concerns, Raji says. At the same time, it beat back shareholder efforts to suspend sales of the technology and conduct an independent human rights assessment. It also spent millions lobbying Congress to avoid regulation.

But then everything changed. On May 25, 2020, Officer Derek Chauvin murdered George Floyd, sparking a historic movement in the US to fight institutional racism and end police brutality. In response, House and Senate Democrats introduced a police reform bill that includes a proposal to limit face recognition in a law enforcement context, marking the largest federal effort ever to regulate the technology. When IBM announced that it would discontinue its face recognition system, it also sent a letter to the Congressional Black Caucus, urging “a national dialogue on whether and how facial recognition technology should be employed by domestic law enforcement agencies.”

“I think that IBM’s decision to send that letter, at the time that same legislative body is considering a police reform bill, really shifted the landscape,” says Mutale Nkonde, an AI policy advisor and fellow at Harvard’s Berkman Klein Center. “Even though they weren’t a big player in facial recognition, the move really put Amazon in political danger.” It established a clear link between the technology and the ongoing national conversation, in a way that was difficult for regulators to ignore.

A cautious optimism

But while activists and researchers see Amazon’s concession as a major victory, they also recognize that the fight isn’t over. For one thing, Amazon’s 102-word announcement was vague on whether its moratorium would cover law enforcement agencies beyond the police, such as US Immigration and Customs Enforcement or the Department of Homeland Security. (Amazon did not respond to a request for comment.) For another, the one-year expiration is a red flag.

“The cynical part of me says Amazon is going to wait until the protests die down, until the national conversation shifts to something else, to revert to its prior position,” says the ACLU’s Crockford. “We will be watching closely to make sure that these companies aren’t effectively getting good press for these recent announcements while simultaneously working behind the scenes to thwart our efforts in legislatures.”

This is why activists and researchers also believe regulation will play a critical role moving forward. “The lesson here isn’t that companies should self-govern,” says Whittaker. “The lesson is that we need more pressure, and that we need regulations that ensure we’re not just looking at a one-year ban.”

Critics say the stipulations on face recognition in the current police reform bill, which only bans its real-time use in body cameras, aren’t nearly broad enough to hold the tech giants fully accountable. But Nkonde is optimistic: she sees this first set of recommendations as a seed for additional regulation to come. Once passed into law, they may become an important reference point for other bills written to ban face recognition in other applications and contexts.

There’s “really a larger legislative movement” at both the federal and local levels, she says. And the spotlight that Floyd’s death has shined on racist policing practices has accelerated its widespread support.

“It really shouldn’t have taken the police killings of George Floyd, Breonna Taylor, and far too many other Black people, and hundreds of thousands of people taking to the streets across the country, for these companies to realize that the demands from Black- and brown-led organizations and scholars, from the ACLU, and from many other groups were morally correct,” Crockford says. “But here we are. Better late than never.”